Write alt text efficiently so it improves SEO, brand recognition, and accessibility at the same time

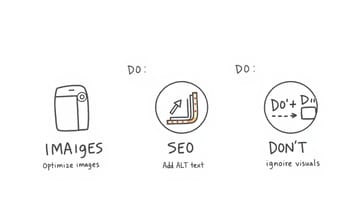

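- List the core information for each product image, and keep the alt text within 30 characters.

Easier for search engines to index, friendlier for screen reader users, and less redundant information.

- Check whether the alt text contains three or more primary keyword groups, and avoid stacking repeats.

Helps images get classified correctly and raises their exposure in image search, while preventing demotion for over-optimization.

- Lock in the brand tone: replace generic adjectives with descriptive wording, and refresh the alt text of at least 20% of product images in each update.

*Fine-tuning the tone shows a consistent style*, strengthens the brand impression among shoppers, and helps with differentiation.

- *Reserve empty alt (alt="") for purely decorative images*, keeping them to no more than 10% of the images on each page.

*Keeps screen readers from interrupting browsing*, keeps the focus on the main content, and stays in line with W3C accessibility guidelines.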
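The thresholds in the checklist above can be turned into a quick audit. What follows is only a minimal sketch: `audit_alts`, `AltReport`, and the `keywords` input are hypothetical names made up for illustration, and reading "three or more keyword groups" as a warning flag is an assumption about how the checklist is meant to be applied.

```python
from dataclasses import dataclass, field


@dataclass
class AltReport:
    too_long: list = field(default_factory=list)       # alt texts over the length limit
    keyword_heavy: list = field(default_factory=list)  # alt texts with many keyword hits
    decorative_ratio: float = 0.0                      # share of images with alt=""
    decorative_over_limit: bool = False


def audit_alts(alts, keywords, max_len=30, keyword_flag_at=3, max_decorative_ratio=0.10):
    """Check one page's alt texts against the checklist thresholds.

    alts: one string per <img>; "" stands for a decorative image (alt="").
    keywords: the page's primary keyword phrases (hypothetical input).
    """
    report = AltReport()
    for alt in alts:
        if len(alt) > max_len:
            report.too_long.append(alt)
        lowered = alt.lower()
        # Count how many distinct keyword phrases appear in this alt text.
        hits = sum(1 for kw in keywords if kw.lower() in lowered)
        if hits >= keyword_flag_at:
            report.keyword_heavy.append(alt)
    if alts:
        decorative = sum(1 for alt in alts if alt == "")
        report.decorative_ratio = decorative / len(alts)
        report.decorative_over_limit = report.decorative_ratio > max_decorative_ratio
    return report
```

Calling `audit_alts(page_alts, ["glass cup", "ceramic mug"])` returns a report you can skim by hand; the numbers are starting points from the checklist, not hard rules.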

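Why is correct alt text indispensable for SEO?

One day Lee was browsing his own website and noticed that some images seemed to have simply vanished from Google Search, as if the page were hung with a few sheets of "transparent film." He wondered why photos that had uploaded just fine kept being treated as nonexistent. Pixy has run into something similar, and at times the alt attribute felt like an invisible threshold that kept an image's meaning locked outside. Was the alt text missing, written wrong, or just badly formatted? Quite a few preliminary reports note that many sites accidentally skip alt text, repeat the same content, or cram in irrelevant keywords; it looks like hard work, yet it leaves both search engines and assistive tools confused. So how do you tell whether your own images have turned into "empty tags"? And beyond the technical side, are context and word choice blind spots that often get missed? In truth it is not just Lee and Pixy; most people seem to fall into these small traps now and then.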

How do you keep Google from treating your images as empty tags?

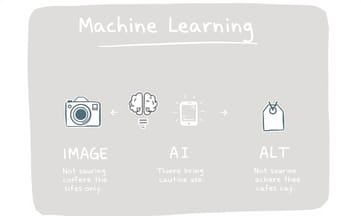

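It is hard to say exactly how Google reads the images on a page these days. Some people picture the algorithm as "telling a story from the picture," but working out what message the image carries still depends on those simple text annotations. Pixy once pointed out that although search engines have spent years strengthening image recognition with machine learning, for now they do not seem able to fully shed their reliance on alt text, and problems crop up most easily when the link between image and topic is not obvious. If the description is too short, the search system may catch only scattered clues; if it is too long or stuffed with keywords, the algorithm ends up just as lost. Some Western sites have recently been discussing how the fit between the alt text's context and the overall content is becoming more important: it is not enough to write "what you see," you also have to consider why this image appears on this page. Writing alt text no longer feels like a formula exercise; every word may affect later ranking performance.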

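Comparison Table:

| Importance of alt text | Functional role | Keyword use | Regular review | SEO impact |
|---|---|---|---|---|
| Helps search engines understand image content | Distinguishes decorative from informative images | Embed naturally; avoid deliberate stuffing | Revisit descriptions at regular intervals | High-quality alt text can increase exposure |
| Gives visually impaired users a better experience | Builds a clear communication bridge | Focus on the main subject; keep descriptions short and clear | Update old entries to reflect the latest content | Competitive markets call for precise optimization |
| Raises the chance of ranking in image search | Avoids repeating other text on the page | | | |
| Improves recognition and guidance during the shopper's purchase decision | | | | |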

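Can alt text deliver SEO results and brand personality at once?

Nobody seems to have actually ruled that alt text must stick to the script. Lee sometimes says that writing a product image description is a bit like chatting in a café, describing the latte art as you look at it: "this latte is still steaming." It does not have to sound official every time, and if a brand wants to add a little humor or warmth, that seems fine too. Some feel that being too serious loses the human touch, but it cannot slide into internet memes either, since it still has to tie back to the subject. Pixy occasionally mentions teams that tried bringing "everyday context" into alt text and found search performance no worse than with the boilerplate style, although such cases so far come mainly from preliminary reports abroad and may not suit every scenario. At the very least one thing is clear: alt text can carry a bit of everyday life and need not be all cold, utilitarian language.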

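Which common mistakes do you tend to make when writing alt text?

Alt text can look trivial on a website, yet it hides plenty of pitfalls people have already stepped in. Some start out thinking it is enough to write down what is in the image, and end up either too terse or far too long-winded. Others hear that keywords matter and cram a long string of brand terms or popular search phrases into the description, which leaves both machines and people using assistive tools unsure what the point is. More common still, designers hand the job entirely to AI generation and never go back to check or correct it, so an image with a perfectly clear subject ends up with an alt text that reads like a baffling collage. Preliminary reports suggest that roughly 30% of websites have descriptions that do not match the page context; errors like this tend to stall search performance and make people who rely on assistive technology take extra detours. Thinking it over, most of us have made these mistakes at some point without noticing at the time.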

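Five steps to optimize product image alt text and grow site traffic!

I once had a client whose product images showed no real traffic growth no matter how they were tweaked. Instead of rushing to apply trending keywords, I first separated the images that were actually there to sell something from the ones that were only decoration, a bit like doing a jigsaw puzzle, though not quite. I spent a few days rewriting each image's alt text in plain terms: not a running inventory, but a description with a touch of product character, such as "glass cup with a small wooden handle," occasionally with a small detail about the setting. On a later pass I also filled in the places where alt text was heavily duplicated or missing altogether, and checked whether it collided with the surrounding text. Interestingly, after this approach image search traffic rose to several times its original level, with no sophisticated tools involved, only regular manual checks and corrections. From that initial observation, it seems that working through these small steps in order is enough to see a fairly clear effect.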

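Alt text is not just an image translator; it is key to accessible design!

Alt text is sometimes less a mechanical "image translator" and more a guide dog quietly staying at your side. You barely notice it, but when the picture is blurry, the connection drops, or a screen reader starts up, it quietly leads people around the obstacle and brings the details hidden in the image to them in another language. Some designers may not yet realize that alt descriptions are not just a cheat sheet for search engines; they are also a bridge, with users at one end and search systems like Google at the other. You occasionally hear people say "it makes little difference whether you add it," only to discover in an unusual situation that this thing has been quietly connecting the site to people with all kinds of needs. It may not be conspicuous, but without it something always seems to snag.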

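Do even simple decorative images need a clearly defined function?

"But my images are really simple..." The designer had barely finished when Lee moved the mouse to a color block on the screen. "What would you write for a background image like this?" The designer hesitated: "Could I just leave it blank?" Lee shook his head, as if recalling some site that had done exactly that. Some observations suggest that in roughly 70% of engineering projects, nobody has really sorted out how purely decorative and informative image files should each be handled. Lee offered an example: "If it is only decoration, leave the alt empty (alt=""); if it is a product photo, the main subject has to be spelled out, or the focus is lost." Only then did the designer realize that settling the function first matters more than agonizing over wording. Sometimes overlapping with the heading or surrounding text is not a big problem, but when an image really is full of detail, you cannot cut corners.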
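To see where a page stands on this decorative-versus-informative split, a small scan of the markup can help. The sketch below uses Python's standard `html.parser`; the class name `ImgAltScanner` and the helper `scan` are hypothetical, and the three buckets (missing alt, empty alt, described) are just one way of slicing it, on the assumption that an image with no alt attribute at all is a gap to fix rather than a deliberate decorative choice.

```python
from html.parser import HTMLParser


class ImgAltScanner(HTMLParser):
    """Sort <img> tags into missing-alt, decorative (alt=""), and described."""

    def __init__(self):
        super().__init__()
        self.missing = []     # src of images with no alt attribute at all
        self.decorative = []  # src of images with an empty alt
        self.described = []   # (src, alt) pairs that carry real text

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src", "")
        if "alt" not in attr:
            self.missing.append(src)
        elif not (attr["alt"] or "").strip():
            # alt="" (or a bare alt with no value) marks the image as decorative.
            self.decorative.append(src)
        else:
            self.described.append((src, attr["alt"].strip()))


def scan(html):
    """Parse an HTML string and return the populated scanner."""
    scanner = ImgAltScanner()
    scanner.feed(html)
    scanner.close()
    return scanner
```

Images that land in `missing` are the ones to review first: each should either get a description or an explicit `alt=""` once its function is decided.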

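How does high-quality alt text influence purchase decisions?

A few years ago, data from international research firms reportedly showed that nearly half of people use image search online when making shopping decisions, especially in e-commerce-led markets such as the United States (Statista, around 2023). Figures like this get re-quoted by different platforms and marketing teams every so often; the overall direction stays the same, though the quoted share varies, with some citations landing closer to 70%. There are also preliminary observations that consumers in some Asian regions rely on image search slightly less, although the overall trend is still rising year by year. Compared with text-based SEO, the effect of attracting new customers purely through images can sometimes differ by a factor of tens, though this usually happens only with visually driven products such as apparel, beauty, or home design. Industry reports occasionally note that "Image SEO" has become a must-have strategy for many North American brands, so it is no surprise that discussion of alt text optimization has suddenly picked up. In the end the trend is a reminder: a site that neglects high-quality image descriptions is likely to miss out on visual traffic that should have been its own.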

Follow this process to build high-converting alt text. Are you ready?

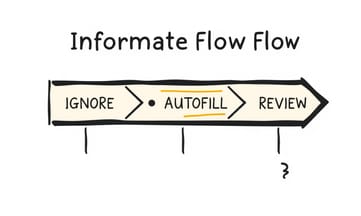

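Pixy once offered a fun comparison to building with LEGO. Not every image needs a scripted, long-winded write-up; it is more like slowly piecing parts together on a table, one step at a time. First work out whether the photo is decoration or key content; once the purpose is clear, the writing is less likely to go wrong. People often hesitate at the start over how to describe things aptly, but capturing the most prominent element in the frame is enough, with no need to cram in much more.

Next, some people get stuck on where to put keywords. Working them in naturally is enough; there is no need to fill the whole sentence. Product features, colors, and materials slipped in as you go actually read as more genuine. I have also seen people copy the heading or neighboring text straight in, which undercuts the effect. Pixy has observed that alt text with that much duplication usually does not help. Now and then one or two entries also get left un-updated, so they need to be rechecked every time the site is redesigned.

Laid out as a process, it breaks into roughly five parts: identify the function, describe concisely, work in the key points naturally, do not copy the surrounding text, and come back to review after a while. It may feel fiddly, but once it becomes habit the resulting alt text structure holds steady, and beginners are less likely to write carelessly or leave gaps. Some details you may have to feel out and adjust yourself; there is no rigid rulebook, because every site's situation is a little different.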
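The "do not copy the surrounding text" step is easy to spot-check automatically. Here is a rough sketch using `difflib.SequenceMatcher` from the standard library; the function name `duplicates_nearby_text` and the 0.9 similarity threshold are assumptions chosen for illustration, not values taken from any guideline.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def duplicates_nearby_text(alt, nearby_texts, threshold=0.9):
    """Return True if alt is (nearly) a copy of a nearby heading or caption.

    nearby_texts: headings, captions, or link text around the image.
    threshold: similarity ratio from 0 to 1; 0.9 is an assumed starting point.
    """
    a = normalize(alt)
    if not a:
        return False  # empty alt (decorative image) is not a duplicate
    for text in nearby_texts:
        b = normalize(text)
        if b and SequenceMatcher(None, a, b).ratio() >= threshold:
            return True
    return False
```

A flagged alt text is only a prompt for a human look: as noted earlier, some overlap with a heading is not always a problem, so treat the result as a review list rather than a verdict.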

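How does a clear, fitting alt text become the user's only clue?

Images can suddenly fail to load no matter how stable your site is. In practice, if the alt text was designed with care, then even when the picture does not show or the connection stalls, users can probably grasp the point from those few words, or at least know what is missing. It does not have to be complicated. Start from "identifying the purpose": for product images describe the features and selling points, and for backgrounds or decorative images an empty alt is actually clearer. Check occasionally and give AI-generated content a human review; you will sometimes find small problems such as robotic phrasing or keyword stacking, which only need a fix tailored to the context. If you are still uneasy, pair this with browser extensions that flag missing alt text and fill in the details by hand, which is a bit more flexible than relying on tools alone.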